Why is the internet still (mostly) a dumpster fire for kids?

For those who watch the general kids-and-internet space, the last couple of weeks have been pretty busy. Ireland rolled out a framework to allow parents and teachers to regulate phones in schools, and the UK issued its Codes of Practice for how companies should comply with the Online Safety Act. Meanwhile in the US, a California district court ruled that Meta, Alphabet, ByteDance and Snap must face lawsuits alleging they designed their platforms to attract children and be addictive to them, thereby harming their mental health. Over the years countless parents and teachers have cornered me with the question: why is the internet STILL such a dumpster fire for kids?

To avoid a barrage of clarification messages, let me be clear that I'm writing this post specifically for parents rather than for privacy lawyers and/or trust and safety professionals[1].

Let's begin. TLDR: yes, lots of things are still broken in kids' and teens' online experiences. I used to start a lot of talks with 'the internet[2] was never designed for kids!', but I want to begin here with some things that have actually improved over the last ten years (these are often hard to spot because they happen behind the scenes):

A lot of money has been invested in this problem

The companies behind TikTok, Instagram, Snap, YouTube and other consumer social brands spend literally hundreds of millions of dollars on Trust and Safety teams every year (it's now an entire profession). While this isn't exactly a Chief Children's Officer[3], it may be a more scalable long-term solution (if for no other reason than that it's now a standard expense item in most public companies' earnings disclosures). On top of this, about $200m is invested annually into Kidtech and Safetytech startups (more on both below).

It’s not just age-gating any more

Many companies behind the games and services your kids spend time with have become much more thoughtful about young audiences. Roblox enables a range of features for all users and then uses age verification to unlock others. Epic Games has rolled out cabined accounts across its Fortnite platform, which give younger players access to the features that don't require parental consent.

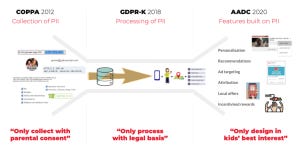

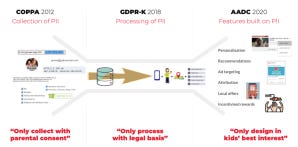

Lots of laws

Ten years ago, there was basically a single law (COPPA) protecting kids' online activity (specifically their personally identifiable information, or PII). Today there are many, including GDPR-K, the Age Appropriate Design Code, the Online Safety Act and others, which have extended protections beyond data privacy to general design.

It’s now easier to build services which support young audiences

We now have industry segments called Kidtech and Safetytech, whose startups build products that make it easier for digital consumer services to support kids and teens. In fact, Epic Games has made its parent verification service completely free for any developer to use.

Nearly all parents use parental tools

Apparently 86% of parents (up from 80% in 2022) say they use at least one parental control setting on a gaming device. That is certainly a much larger number than ten years ago.

In short, ten years ago the internet for kids and teens was a dumpster fire. Today I would categorise it as a smaller dumpster fire. But not for lack of trying or capital. As a parent, you're probably reading this and thinking 'yes, that's great, but why can't anyone actually fix it?'. Here are some of the challenges that developers grapple with, and whose principles are worth understanding:

Privacy v Safety

First, there is the ongoing philosophical debate (and general third rail of all discussion about the internet) between privacy (nobody can look at your private messages) and safety (private messages need to be screened for bad things). I was once in a long argument with a children's group in which I was arguing for a higher age of digital consent (more protection) and they were arguing for a lower one (greater rights). It's tricky, and it continues to pervade many of these challenges.

Money

Building features specifically for kids has generally been hampered by the perception that the cost of the problem (compliance risk, general safety) does not justify the price of the solution (money, opportunity cost). Said plainly, kids aren't considered revenue-generating, so in an internal contest between a feature that will generate revenue and one that won't, the former usually wins[4].

Building parent tools is surprisingly hard

Setting up my son’s first iPhone, I am disappointed with the family controls. Everything seems unnecessarily clunking and often doesn’t work. I don’t have the fine control I want on which apps he wants to install.

Why do no companies even get this close to right?

— Peter Hague PhD (@peterrhague) November 18, 2023

There are a few reasons why so many parental tools elicit this kind of response:

- Parent tools for an individual app are often trying to simultaneously maintain compatibility with different platform requirements, respond to the laws in different countries (which are not always consistent) and still be useful for the app in question.
- There is no requirement that the User Interface (UI) developers working on parental tools be parents themselves.
- UI designers sometimes design for sophisticated users who spend a lot of time in front of these interfaces, whereas many parents absolutely do not and would prefer everything to look like Winamp.
- Parents are extremely time-poor and/or don't feel this topic is important.

Everybody lies

Arguably the single biggest challenge to reducing the size of this problem is reliably identifying a user's age. The age gate is probably one of the greatest incentives for lying that our civilisation has ever devised. Many of the problems parents face with their kids' digital wellbeing are fundamentally driven by the fact that kids can access an environment designed for adults (bypassing hundreds of millions of dollars of trust and safety investment) just by doing some basic maths[5] on their date of birth.
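To make the 'basic maths' concrete, here is a minimal Python sketch of a typical self-declared age gate. The function names and the 13-year minimum are illustrative assumptions rather than any particular service's code; the point is simply that the gate can only check whatever date the user types in.

```python
from datetime import date


def years_between(dob: date, today: date) -> int:
    """Whole years from dob to today (one fewer if this year's birthday hasn't happened yet)."""
    before_birthday = (today.month, today.day) < (dob.month, dob.day)
    return today.year - dob.year - int(before_birthday)


def self_declared_gate(claimed_dob: date, today: date, minimum_age: int = 13) -> bool:
    # Hypothetical gate: it trusts the typed-in date completely; nothing verifies it.
    return years_between(claimed_dob, today) >= minimum_age


today = date(2024, 1, 15)
real_dob = date(2014, 6, 1)     # a nine-year-old
claimed_dob = date(2010, 6, 1)  # the same child, typing a birth year four years earlier

print(self_declared_gate(real_dob, today))     # False
print(self_declared_gate(claimed_dob, today))  # True
```

Nothing in the gate can tell the two dates apart, which is why so much of the trust and safety work behind it is easy to sidestep.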

Sidebar: this is the part where parents usually ask me to build what amounts to an internet lie detector. And actually the solution is kind of that simple: essentially what the world needs is a Universal Age API[6] that developers can query to verify a user's age. Probably[7] the best way to generate this data is to get hardware manufacturers (Apple, Samsung etc.) to make it mandatory when activating a phone for an under-13 (i.e. a parent has to enter their kid's date of birth into the device before it is activated) and then collaborate on the software platform. Easy![8]
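For the technically curious, here is a minimal Python sketch of what the developer side of such a Universal Age API might look like. Everything in it is hypothetical: the names `AgeBracket`, `DeviceAgeRecord` and `age_bracket`, the assumption that the device stores the parent-entered date of birth, and the 13/18 cut-offs are illustrative choices, not any real platform's API. One design choice worth noting is that apps receive only a coarse age bracket, never the birth date itself, which is one way of easing the privacy v safety tension.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class AgeBracket(Enum):
    # Hypothetical coarse brackets: apps learn the bracket, never the birth date.
    UNDER_13 = "under_13"
    TEEN_13_17 = "13_17"
    ADULT = "18_plus"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class DeviceAgeRecord:
    # Hypothetical: entered once by a parent during phone activation.
    date_of_birth: Optional[date]


def age_on(dob: date, today: date) -> int:
    """Whole years between dob and today."""
    years = today.year - dob.year
    # One year fewer if this year's birthday hasn't happened yet.
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1
    return years


def age_bracket(record: DeviceAgeRecord, today: date) -> AgeBracket:
    """What a hypothetical Universal Age API might return to an app."""
    if record.date_of_birth is None:
        return AgeBracket.UNKNOWN  # e.g. the device was set up without a birth date
    age = age_on(record.date_of_birth, today)
    if age < 13:
        return AgeBracket.UNDER_13
    if age < 18:
        return AgeBracket.TEEN_13_17
    return AgeBracket.ADULT


# An app asks for the bracket and adjusts its features accordingly.
childs_phone = DeviceAgeRecord(date_of_birth=date(2014, 6, 1))
print(age_bracket(childs_phone, today=date(2024, 1, 15)))  # AgeBracket.UNDER_13
```

An app that received `UNDER_13` could then switch on a cabined, consent-gated experience instead of relying on whatever birth date the user typed in.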

The most research into age estimation is likely being done by the internet platforms and social networks (though they rarely mention it). We can only do it better if the industry collaborates more. Who among the giants will be first to share their learnings and tools?

— Max Bleyleben (@mbleyleben) April 1, 2021

Despite a lot of headlines (including, actually, this one), the internet for kids and teens is gradually getting better. As somebody who knows far too much about the space, I'm quite surprised by how optimistic I am about continued progress. Hopefully this has been a helpful read for parents and teachers trying to understand some of the context behind the remaining challenges.

For the extremely valuable intersection of these groups, I would love your feedback on this post.

[2] Or, more accurately, the attention economy, which is generally what we really mean.

[3] A role I've agitated for over the years.

[4] There are all sorts of exceptions to this statement, but I'm talking about a regular, nothing-on-fire, feature planning cycle.

[5] And it's exactly this behaviour which leads to the flywheel of 'I'm not going to register as a child because I don't want diluted features' and 'I'm not going to build features for kids because they simply don't show up'.

[6] An Application Programming Interface (API) is a system which lets developers directly access functionality or data in another platform.

[7] There are lots of holes to pick in this, but I think it's the highest-probability area for a technical solution. Alex Stamos had an excellent, sadly now deleted, set of tweets about this idea.

[8] Whether the path to a Universal Age API ultimately requires government involvement (if only to let very large companies collaborate without anyone screaming 'anti-trust') will be interesting to see.